Background

After writing Clean Architecture Demystified, which covered the theoretical parts of Clean Architecture, it became apparent that questions remained about how to implement it in practice. This resulted in two things: a Todo app built with Spring Boot, which I shared on GitHub, and this article, which walks through its implementation.

Demo Todo app overview

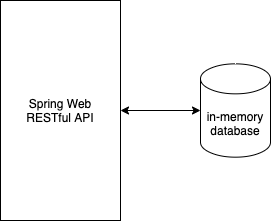

The demo app was built using Spring Boot and consisted of a RESTful API and an H2 in-memory database for persistence.

Screaming intent and purpose

One of the goals of Clean Architecture is for the source code to scream its intent and purpose. Below the project's package structure is shown.

- entities
- shared
  - infrastructure
    - persistence
      - spring
        - data_jpa
- usecase
  - add_task_to_todo
    - adapter
    - infrastructure
      - spring
        - web
  - browse_tasks_in_todo
    - ...
  - browse_todos
    - ...
  - create_todo
    - ...
  - remove_task_from_todo
    - ...
  - remove_todo
    - ...
  - update_task_in_todo
    - ...
  - update_todo
    - ...

Entities

The highest-level package is the entities package, which contains pure business rules and objects that have no dependencies outside of their own package. This package only contains application-agnostic code, i.e. code that doesn't change when application implementation details change. Its sole reason to change is a change in business requirements.
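As a rough illustration of what "no dependencies outside of its own package" can look like, here is a minimal sketch of two entities. The class names, fields and the duplicate-task rule are assumptions made for the example, not code taken from the demo app.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical entity: plain Java, no framework imports or annotations.
final class Task {
    private final String description;

    Task(String description) {
        if (description == null || description.trim().isEmpty()) {
            throw new IllegalArgumentException("A task needs a description");
        }
        this.description = description.trim();
    }

    String description() {
        return description;
    }
}

// Hypothetical entity holding an illustrative business rule.
final class Todo {
    private final String name;
    private final List<Task> tasks = new ArrayList<>();

    Todo(String name) {
        if (name == null || name.trim().isEmpty()) {
            throw new IllegalArgumentException("A todo needs a name");
        }
        this.name = name.trim();
    }

    // Assumed rule for the sketch: a todo may not contain the same task twice.
    void addTask(Task task) {
        for (Task existing : tasks) {
            if (existing.description().equalsIgnoreCase(task.description())) {
                throw new IllegalStateException("Task already exists in this todo");
            }
        }
        tasks.add(task);
    }

    String name() {
        return name;
    }

    // Read-only view, so callers cannot bypass addTask.
    List<Task> tasks() {
        return Collections.unmodifiableList(tasks);
    }
}
```

Nothing here mentions Spring, JPA or HTTP, so the only reason for these classes to change would be a change in the business rules themselves.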

Shared Infrastructure

The shared infrastructure package, on the other hand, is the lowest-level package. It contains the shared, application-specific persistence delivery mechanism. The code in the data_jpa package is Spring Data JPA-related, such as JPA repositories and Hibernate entities. Please note that the Hibernate entities are not to be confused with the entities in the entities package.

Usecase

The usecase package contains the use cases, each of which has been given a separate sub-package. Each sub-package harbours all of the application-specific code for its use case. In Clean Architecture terminology this translates to Input/Output Boundary interfaces, Request and Response Models, the Interactor implementation and any use case-specific Gateway interfaces. In this application there weren't any use case-specific Gateways; instead there was one Gateway that was shared by all of them. For this reason, this code was put directly in the usecase package.
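To make those terms concrete, here is a minimal sketch of the pieces of one use case. The type names follow the naming used in this project (Interactor, Interaction, Presenter, Gatekeeper), but the method names createTodo, present and save, as well as the model fields, are assumptions made for the example.

```java
// Request Model: plain data crossing the Input Boundary.
final class CreateTodoReqModel {
    final String name;

    CreateTodoReqModel(String name) {
        this.name = name;
    }
}

// Response Model: plain data crossing the Output Boundary.
final class CreateTodoResModel {
    final long id;
    final String name;

    CreateTodoResModel(long id, String name) {
        this.id = id;
        this.name = name;
    }
}

// Input Boundary interface.
interface CreateTodoInteractor {
    void createTodo(CreateTodoReqModel request);
}

// Output Boundary interface.
interface CreateTodoPresenter {
    void present(CreateTodoResModel response);
}

// Shared Gateway interface (hypothetical method; returns the new todo's id).
interface TodoGatekeeper {
    long save(String todoName);
}

// Interactor implementation: orchestrates the use case.
final class CreateTodoInteraction implements CreateTodoInteractor {
    private final TodoGatekeeper gatekeeper;
    private final CreateTodoPresenter presenter;

    CreateTodoInteraction(TodoGatekeeper gatekeeper, CreateTodoPresenter presenter) {
        this.gatekeeper = gatekeeper;
        this.presenter = presenter;
    }

    @Override
    public void createTodo(CreateTodoReqModel request) {
        long id = gatekeeper.save(request.name);
        // The result leaves through the Output Boundary, not as a return value.
        presenter.present(new CreateTodoResModel(id, request.name));
    }
}
```

The Interactor only sees interfaces and plain models, so it compiles without any knowledge of the database or the web layer.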

For each use case package, the code specific to the Interface Adapters layer was put in the adapter sub-package. It holds the ViewModel and the implementation of the Output Boundary interface, i.e. the Presenter.

Each use case package also gathers the code specific to the Frameworks & Drivers layer in its own sub-package called infrastructure. To further enhance intuitive understanding of the project structure I created the sub-packages spring and web, as shown in the package structure above. In the web package I added Spring Web REST controllers and MVC models.

Create Todo use case packages and files

This section shows all the files and packages that together form a use case. The create_todo example is identical to the rest of the use case packages, except that some lack an MVC model file because they don't need one. Packages end with a slash and files have the .java extension.

- create_todo/

- adapter/

- CreateTodoPresentation.java

- CreateTodoPresenter.java

- CreateTodoViewModel.java

- infrastructure/

- spring/

- web/

- CreateTodoMvcController.java

- CreateTodoMvcModel.java

- CreateTodoInteractorFactory.java

- CreateTodoInteractorMaker.java

- CreateTodoPresenterFactory.java

- CreateTodoPresenterMaker.java

- CreateTodoInteraction.java

- CreateTodoInteractor.java

- CreateTodoPresenter.java

- CreateTodoReqModel.java

- CreateTodoResModel.java

One thing I've picked up from Golang programming is that interfaces should preferably be small and that their names should signify their purpose. For example, the "io" package has an interface called Writer and another called Reader. The gist is that if something writes, it's called a Writer, and if something reads, it's a Reader. This naming scheme only works as long as the interfaces are small. After applying it to this project I ended up with the following standard.

- Interface: Presenter --> Implementation: Presentation

- Interface: Interactor --> Implementation: Interaction

- Interface: Maker --> Implementation: Factory

- Interface: Gatekeeper --> Implementation: Gateway

All the classes and interfaces in the above list should now make sense, except for one: the CreateTodoPresenter.java in the adapter package. It seems like a duplicate, since there's already one in the use case package create_todo. The reason for a second Presenter interface is that the one in the use case package should be ViewModel-unaware, since it's the Output Boundary interface. Nonetheless, its implementation should produce a ViewModel. The ViewModel cannot be returned up the call chain through the Interactor to the REST controller, because that would require source-code dependencies on the ViewModel in both the Presenter and the Interactor. It therefore has to be exposed by a getter function.

As a side note, this is one of two reasons for requiring the four Factory/Maker files: the Presenter implementation has to be stateful, since it has a gettable ViewModel field, and thus cannot be autowired by Spring as a singleton member of the REST controller. The second reason is the Dependency Rule: the Presenter should be Spring-unaware, i.e. unannotated.

Returning to the Presenter's getter function: to achieve it, a second Presenter interface is needed that extends the one in the use case package. The actual implementation doesn't implement the use case version directly; instead it implements the extended one in the adapter package. The PresenterMaker interface makes instances of the Presenter interface in the adapter package, thus allowing the REST controller to get the ViewModel.
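The relationship between the two Presenter interfaces can be sketched roughly as follows. To keep the example in a single file, the nested holder classes UseCaseLayer and AdapterLayer stand in for the create_todo and adapter packages; those holders, the present method name and the model fields are assumptions made for the sketch. Only getViewModel() is a name taken from the project.

```java
// Stands in for the use case package create_todo.
final class UseCaseLayer {
    static final class CreateTodoResModel {
        final long id;
        final String name;

        CreateTodoResModel(long id, String name) {
            this.id = id;
            this.name = name;
        }
    }

    // Output Boundary: knows nothing about the ViewModel.
    interface CreateTodoPresenter {
        void present(CreateTodoResModel response);
    }
}

// Stands in for the adapter sub-package.
final class AdapterLayer {
    static final class CreateTodoViewModel {
        final String message;

        CreateTodoViewModel(String message) {
            this.message = message;
        }
    }

    // Extended interface: adds the getter that the REST controller needs.
    interface CreateTodoPresenter extends UseCaseLayer.CreateTodoPresenter {
        CreateTodoViewModel getViewModel();
    }

    // Stateful implementation: it keeps the ViewModel until it is fetched.
    static final class CreateTodoPresentation implements CreateTodoPresenter {
        private CreateTodoViewModel viewModel;

        @Override
        public void present(UseCaseLayer.CreateTodoResModel response) {
            viewModel = new CreateTodoViewModel(
                    "Created todo '" + response.name + "' with id " + response.id);
        }

        @Override
        public CreateTodoViewModel getViewModel() {
            return viewModel;
        }
    }
}
```

The Interactor would only ever hold a UseCaseLayer.CreateTodoPresenter reference, while the controller holds the AdapterLayer version of the same object and can therefore call getViewModel().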

The below figure doesn't include the factories that the REST controller uses, but it shows how it's able to get the ViewModel from the Presenter. Please note that all source-code dependencies flow from low-level to high-level layers, in accordance with the Dependency Rule.

Constituents and responsibilities

In this section objects of interest are mentioned along with an outline of their responsibilities.

- REST controller
  - Clean Architecture objects setup. Instantiates and wires together the Interactor and Presenter with help from the Spring IoC container.
  - Converts the Spring Web MVC Model to a Request Model
  - Invokes the Interactor's use case method
  - Logs errors, if any, when invoking the Interactor's use case method
  - Gets the ViewModel from the Presenter
  - Passes the ViewModel to Spring Web for returning an HTTP Response

- MVC model
  - Data transfer object that passes required data to the REST controller.
  - (Optional) Sanity checks of data and technical data validation, e.g. valid e-mail addresses, valid phone numbers etc.

- Interactor
  - Orchestration of calls and checks that together make up a use case. Calls Entities and Gateways.
  - Application-specific business rules, e.g. checks for maximum/minimum limits such as API throttling, demo-version limitations etc.
  - Construction of the Response Model
  - Passing of the Response Model to the Presenter

- Presenter
  - Construction of the ViewModel
  - Converting data from the format most suitable to the application to the format most suitable for the users of the application. Examples of this are localised time formatting and money presentation.
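The last two responsibilities in the list above, constructing the ViewModel and converting data into a user-friendly format, can be sketched as follows. The createdAt field, the date pattern and the constructor parameters are assumptions made for the example; the demo app's models may look different.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

// Response Model: data in the format most convenient for the application.
final class CreateTodoResModel {
    final String name;
    final Instant createdAt; // hypothetical field

    CreateTodoResModel(String name, Instant createdAt) {
        this.name = name;
        this.createdAt = createdAt;
    }
}

// ViewModel: strings that are ready to be shown to a user.
final class CreateTodoViewModel {
    final String title;
    final String created;

    CreateTodoViewModel(String title, String created) {
        this.title = title;
        this.created = created;
    }
}

// Presenter implementation: owns all presentation formatting.
final class CreateTodoPresentation {
    private final DateTimeFormatter formatter;
    private CreateTodoViewModel viewModel;

    CreateTodoPresentation(Locale locale, ZoneId zone) {
        // Locale and time zone are presentation concerns, so they live here.
        this.formatter = DateTimeFormatter.ofPattern("d MMM yyyy, HH:mm", locale).withZone(zone);
    }

    void present(CreateTodoResModel response) {
        viewModel = new CreateTodoViewModel(response.name, formatter.format(response.createdAt));
    }

    CreateTodoViewModel getViewModel() {
        return viewModel;
    }
}
```

With Locale.ENGLISH and the zone Europe/Stockholm, an Instant of 2024-01-15T10:30:00Z is presented as "15 Jan 2024, 11:30". The Interactor never sees the formatted string.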

Controller testability & Dependency Injection

When using Spring there are a number of ways to define dependencies. The first is in XML, which has the benefit of leaving the source-code completely Spring-unaware i.e. no Spring dependencies in the source-code. The second option is to utilise annotations, which creates source-code dependencies to the Spring framework. The third option is to leverage JavaConfig files which define beans, but to use them it's required to have a source-code reference to the Spring ApplicationContext, which also ties your code to the Spring framework.

In this project I chose to use annotation-based dependency configuration. This choice meant having source-code dependencies on the Spring framework. Clean Architecture's Dependency Rule, which I explain in great detail in this article, restricts annotations to the Frameworks & Drivers layer, which translates to the code in my infrastructure packages.

I wanted to leverage the Spring IoC container for the REST controllers' dependencies so that it would be possible to write tests for them. Their dependencies are the Presenter and the Interactor, but due to the Dependency Rule, annotating these wasn't an option. After giving it some more thought, I realised that even if they were annotated it wouldn't work as I'd like. This is because the REST controller is instantiated as a singleton by the Spring framework, whereas the Presenter has to be of prototype scope, i.e. a new instance of the Presenter has to be instantiated for each HTTP request. This is known as the scoped bean injection problem.

My solution was to introduce an abstract PresenterFactory and an abstract InteractorFactory (the Maker interfaces) in the infrastructure package. The factories would be stateless and would therefore be well suited to instantiation as singletons by Spring. They would be used in the REST controllers' methods to construct instances of Presenters and Interactors. Note, however, that they output interfaces, not concrete classes. The factory implementations were annotated for automatic wiring in the REST controllers.

As can be seen in the above image, the REST controller and its dependencies are annotated, which means that Spring will take care of the instantiation of the controller and its dependencies.
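The factory approach can be sketched in plain Java as follows. This is a simplified stand-in rather than the project's code: Spring annotations are left out so that the example compiles on its own, the two Presenter interfaces are collapsed into one, the Gateway is omitted, and the make, createTodo, present and create method names are assumptions.

```java
final class CreateTodoReqModel {
    final String name;
    CreateTodoReqModel(String name) { this.name = name; }
}

final class CreateTodoResModel {
    final String name;
    CreateTodoResModel(String name) { this.name = name; }
}

final class CreateTodoViewModel {
    final String message;
    CreateTodoViewModel(String message) { this.message = message; }
}

interface CreateTodoInteractor {
    void createTodo(CreateTodoReqModel request);
}

// Collapsed for brevity: the project splits this across two interfaces.
interface CreateTodoPresenter {
    void present(CreateTodoResModel response);
    CreateTodoViewModel getViewModel();
}

// Stateful, so one instance is needed per request.
final class CreateTodoPresentation implements CreateTodoPresenter {
    private CreateTodoViewModel viewModel;

    @Override
    public void present(CreateTodoResModel response) {
        viewModel = new CreateTodoViewModel("Created todo: " + response.name);
    }

    @Override
    public CreateTodoViewModel getViewModel() { return viewModel; }
}

final class CreateTodoInteraction implements CreateTodoInteractor {
    private final CreateTodoPresenter presenter;

    CreateTodoInteraction(CreateTodoPresenter presenter) { this.presenter = presenter; }

    @Override
    public void createTodo(CreateTodoReqModel request) {
        presenter.present(new CreateTodoResModel(request.name));
    }
}

// Maker interfaces: the abstractions the controller depends on.
interface CreateTodoPresenterMaker {
    CreateTodoPresenter make();
}

interface CreateTodoInteractorMaker {
    CreateTodoInteractor make(CreateTodoPresenter presenter);
}

// Factory implementations: stateless, so a single shared instance is safe.
// In a Spring version these would carry an annotation such as @Component.
final class CreateTodoPresenterFactory implements CreateTodoPresenterMaker {
    @Override
    public CreateTodoPresenter make() { return new CreateTodoPresentation(); }
}

final class CreateTodoInteractorFactory implements CreateTodoInteractorMaker {
    @Override
    public CreateTodoInteractor make(CreateTodoPresenter presenter) {
        return new CreateTodoInteraction(presenter);
    }
}

// Stand-in for the singleton REST controller.
final class CreateTodoMvcController {
    private final CreateTodoPresenterMaker presenterMaker;
    private final CreateTodoInteractorMaker interactorMaker;

    // In a Spring version the makers would be injected by the container.
    CreateTodoMvcController(CreateTodoPresenterMaker presenterMaker,
                            CreateTodoInteractorMaker interactorMaker) {
        this.presenterMaker = presenterMaker;
        this.interactorMaker = interactorMaker;
    }

    CreateTodoViewModel create(String name) {
        CreateTodoPresenter presenter = presenterMaker.make(); // fresh per request
        CreateTodoInteractor interactor = interactorMaker.make(presenter);
        interactor.createTodo(new CreateTodoReqModel(name));
        return presenter.getViewModel();
    }
}
```

Because the controller only holds the two stateless makers, it can stay a singleton while every call to create gets its own Presenter. In a test, the makers can be replaced with versions that hand out test doubles.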

HTTP request example

Below is an example of what happens when the app handles an HTTP request.

To clarify (20) in the figure above, the REST controller has a reference to the Presenter from which it gets the ViewModel by calling its getViewModel() method. It then puts together a ResponseEntity object from the ViewModel.

Analysis

Implementation

Deferred details

The thing that struck me as I was coding was that I coded the application core first, i.e. the Interactor, Request/Response Models, Presenter and the Persistence Gateway. In other words, I was focusing on the business-related code first and didn't have to worry about how my entities were to be persisted to a database, or to which type of database. Nor did I have to consider where the user input came from, be it a REST API or a CLI app. I could focus entirely on the use case at hand, from as close to a business perspective as possible. The delivery mechanisms, such as the UI and database, were details I could defer till last.

Code structure standard

Another thing was that it felt nice to have a blueprint to follow when coding. I knew in advance which classes, interfaces and packages needed to be created. It was their content that needed a bit of thought. By following a blueprint or recipe, I had in fact standardised the code structure, which has long-term maintenance implications. When a new developer joins the development team, they only have to study and understand one of the use case implementations to know the whole structure of the application. Given that everyone involved adheres to the standard, the source code will remain easy to navigate and easy to learn as time passes.

Single-Responsibility-Principle

The end result was a structured code mass where each class/interface had explicit and, in most cases, few responsibilities, which is in line with the Single-Responsibility Principle (SRP). The other side of the SRP coin is that each use case consisted of a whopping 14 Java files, 5 of which were interfaces.

Independent component decomposition

It should be noted that this Clean Architecture implementation wouldn't score full marks if graded, since it's not decomposed into independent components (such as JAR or DLL files), which in addition should be independently deployable. To achieve independent deployability, something like OSGi could be used. Each use case could, for example, be separated into two or more JAR files. One option would be to have the Entities and Use Cases layers in one, and the Interface Adapters and Frameworks & Drivers layers in another. These could then be patched and upgraded independently without requiring a restart of the application, since OSGi supports hot-swappable JAR files.

Boilerplate hell

While implementing the use cases, it felt like a pain to repeatedly create the same classes, interfaces and packages. A code generation tool could mitigate the cost of writing this boilerplate code and reduce implementation time.

Data validation

The question is where to put validation of user input data in your code. In this project it felt natural to leverage Jakarta Bean Validation, which meant putting annotations in the MVC models. In this way the inner application, i.e. the Interactor and Entities, was shielded from invalid data. The latter could therefore focus on checking business rule constraints instead, which provided a clean and intuitive separation of concerns.

Screaming intent and purpose

One nice feature about my implementation is that its package structure screams its purpose and intent. It's easy for a developer who hasn't seen the project to reason about what the application does, without opening a single file of code. In this case, a Todo app.

Last remarks on the implementation of the code

I do believe that the structure of this demo app is a good starting point from which the project's cleanness can improve over time. There's a good separation of concerns where each object has limited responsibility and each part of the application is testable.

All great things start out small, and in the case of software development it's wise to start with a monolithic architecture. That said, there are many ways to architect one. An advantage of the design of this demo app is that practically all the code for each use case is neatly gathered in one parent package. Should the day come when certain parts of the application need to scale to meet changed usage patterns, it's trivial to split the monolith into separate processes and scale them separately.

Testability

A source-code mass without tests means that any change to the code might introduce adverse behaviour i.e. bugs. Tests are vital to achieving rapid and agile development, since the development teams can with some degree of confidence make changes to a system, making it easier to move forward. Making changes to source-code without tests is to boldly go where no developer has gone before.

In this demo app, each part of the code is testable. The REST controller, the Interactor, the Presenter, the factories for the latter two, they're all testable. In conclusion, in implementing Clean Architecture I've created a highly testable code mass.
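As an illustration of why the Interactor is easy to test, the following sketch exercises it with hand-written test doubles and no framework at all. The method names, the TodoGatekeeper type and the test doubles are assumptions made for the example, and a real project would most likely use a test framework such as JUnit instead of the small check helper.

```java
import java.util.ArrayList;
import java.util.List;

final class CreateTodoReqModel {
    final String name;
    CreateTodoReqModel(String name) { this.name = name; }
}

final class CreateTodoResModel {
    final long id;
    final String name;
    CreateTodoResModel(long id, String name) { this.id = id; this.name = name; }
}

interface CreateTodoInteractor {
    void createTodo(CreateTodoReqModel request);
}

interface CreateTodoPresenter {
    void present(CreateTodoResModel response);
}

// Hypothetical Gateway interface; save returns the new todo's id.
interface TodoGatekeeper {
    long save(String todoName);
}

final class CreateTodoInteraction implements CreateTodoInteractor {
    private final TodoGatekeeper gatekeeper;
    private final CreateTodoPresenter presenter;

    CreateTodoInteraction(TodoGatekeeper gatekeeper, CreateTodoPresenter presenter) {
        this.gatekeeper = gatekeeper;
        this.presenter = presenter;
    }

    @Override
    public void createTodo(CreateTodoReqModel request) {
        long id = gatekeeper.save(request.name);
        presenter.present(new CreateTodoResModel(id, request.name));
    }
}

// Test double: replaces the database with a list.
final class InMemoryTodoGateway implements TodoGatekeeper {
    final List<String> saved = new ArrayList<>();

    @Override
    public long save(String todoName) {
        saved.add(todoName);
        return saved.size();
    }
}

// Test double: records what the Interactor passed to the Output Boundary.
final class RecordingPresenter implements CreateTodoPresenter {
    CreateTodoResModel received;

    @Override
    public void present(CreateTodoResModel response) {
        received = response;
    }
}

final class CreateTodoInteractionTest {
    static void createsTodoAndPassesResponseToPresenter() {
        InMemoryTodoGateway gateway = new InMemoryTodoGateway();
        RecordingPresenter presenter = new RecordingPresenter();

        new CreateTodoInteraction(gateway, presenter)
                .createTodo(new CreateTodoReqModel("Groceries"));

        check(gateway.saved.contains("Groceries"), "todo was not saved");
        check(presenter.received != null, "presenter was not called");
        check(presenter.received.id == 1L, "unexpected id");
        check("Groceries".equals(presenter.received.name), "unexpected name");
    }

    private static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }
}
```

No Spring context, web server or database is started, which keeps such a test fast. The same idea applies to the Presenter and the factories.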

Maintenance

Having an enforced coding standard results in the opposite of spaghetti code: the code is predictable, navigable and has fewer ad-hoc implementations to learn. As the code mass expands over time, this gets increasingly important. It facilitates both bug hunting and adding new features.

A coding standard is the foundation on which new features can be added. Clean Architecture, however, does not equate to a standard. It's more like a set of guidelines to follow, but at its core it's about the Dependency Rule and separation of concerns.

Scalability

When it comes to scalability, I'd say that Clean Architecture neither improves nor worsens the ability of a system to scale. It's scalability-independent. To achieve massive scale, one would adopt the micro-services deployment model, where each service could be implemented using any architecture, not just Clean Architecture.

Deployability

As stated in the section on Scalability, in the case of micro-services, the deployability of an application is independent from any architecture chosen to implement an application.

Segmenting a code mass into JARs can mean two things for deployments. If hot-swappable JARs are used, deployments are extremely flexible, since JARs can be swapped while the application continues running. Development teams can then both develop and deploy JARs independently. When hot-swappable JARs are not used, there's the option to deploy either a fat or a thin JAR, neither of which offers independent deployability from a JAR perspective. Given a code mass that consists of several JARs, which might be independently developed by different teams, a top-level project using these has to be compiled and packaged into either a fat or a thin JAR to deploy.

Needless to say, Clean Architecture is not a requirement for deploying applications. Nevertheless, due to the Dependency Rule and separation of concerns, it can facilitate segmenting an application into JARs, which can at least be independently developed. In conclusion, Clean Architecture is more about independent development than deployability.

Closing thoughts

What does Clean Architecture bring to the table? Why would you use it? And for which projects? This section tries to reason about this.

What does Clean Architecture bring to the table?

1. Maintainability: a consequence of having testable code and a coding standard. The latter cannot be attributed to Clean Architecture, since it's a set of general guidelines, not a standard. Nonetheless, a standard can take form from its guidelines.

2. Testability: by adhering to the core of Clean Architecture, which is the Dependency Rule and separation of concerns, the resulting code will be testable, which is the foundation upon which long-lived code masses should be built.

3. Independent development: again, by adhering to the core of Clean Architecture it's easy to segment the code mass into independently developable components, such as JAR files in Java. This enables multiple development teams to work in parallel, but it's also another form of separation of concerns, which makes large problems more manageable by splitting them into smaller ones.

4. Deferred and flexible details: it's possible to write an application completely without delivery mechanisms. This might be valuable at the beginning of new development projects, where decisions concerning databases and UIs have not yet been made. Leveraging Clean Architecture allows development to commence even in their absence. By flexible details I mean replaceable ones, e.g. being able to switch, with minimal changes, from a local MySQL persistence store to AWS DynamoDB when moving to the cloud.

Which factors are Clean Architecture-aligned?

From points (1) and (2) in the above list, it can be deduced that one of the main factors for Clean Architecture is code mass life expectancy, i.e. for how long the code mass will be maintained.

Point (3) suggests another factor: code mass size. The greater the code mass, the greater the need for segmentation to facilitate independent development of each segment. The same factor can also be deduced from point (2): the greater the code mass, the greater the need for tests that give developers the confidence to add new features.

Micro-services

The essence of micro-services is that they are small independent processes. As such, it's unlikely that their code masses will ever get large. When it comes to life expectancy, the same conclusion cannot be made. Overall, micro-services are not a natural fit for Clean Architecture, but depending on how test-heavy the source-code is, it might offer some value to the coder.
